Expired certificate issue on development machines

Fix Certificate Issues on Development Machines

Issue/symptom

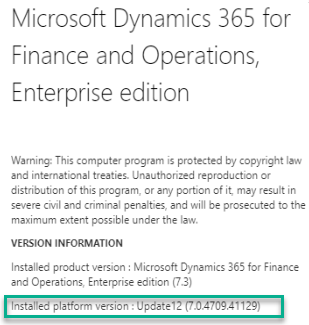

After running smoothly for a long time, one day I got the message shown above while trying to connect to D365FO.

Analysis

There are many possible causes for this message, so the fastest way to narrow it down is to look at Event Viewer. As the figure below shows, the logged reason is an ExpiredCertificateException, together with the ID of the expired certificate.
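The same check can be done from PowerShell instead of clicking through Event Viewer. This is only a minimal sketch: it assumes the exception was written to the Application log as an error event, which may not be the case on your VM, so adjust `LogName` if nothing comes back.

```powershell
# Sketch: search recent error events for the exception name.
# Assumption: the exception is logged in the Application log (Level 2 = Error).
# On your VM it may live in another log; change LogName accordingly.
Get-WinEvent -FilterHashtable @{ LogName = 'Application'; Level = 2 } -MaxEvents 500 -ErrorAction SilentlyContinue |
    Where-Object { $_.Message -like '*ExpiredCertificateException*' } |
    Select-Object -First 5 TimeCreated, ProviderName, Message |
    Format-List
```

If an event is found, its message text should contain the ID of the expired certificate, which is the value to look for in the certificate store.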

Solution

In this case, we can clone each expired certificate and give the clone a new expiration date. The steps are as follows.

1) Launch "Manage computer certificates"

2) Find the expired certificates. In this case, we need to replace the 4 certificates shown in the figure below.

3) Double-click an expired certificate, then copy its thumbprint value.

4) Clone the certificate with Windows PowerShell (run as administrator).

Paste these commands, replacing the thumbprint in the second command with the value copied in step 3.

Set-Location -Path "cert:\LocalMachine\My"

$OldCert = (Get-ChildItem -Path 4C82C05E452D08A2BE1CC4F92DA24CF98E493F1D)

New-SelfSignedCertificate -CloneCert $OldCert -NotAfter (Get-Date).AddMonths(999)

Then copy or save the thumbprint of the new certificate from the output:

B21B106BA4E0F7B090BEA027529C4D2E8D63F281

5) Refresh "Manage computer certificates". You should now see the cloned certificate.

6) Replace the old thumbprint with the newly created one in the files under C:/AOSService/webroot

7) Repeat steps 3-6 for the remaining expired certificates.

8) Finally, reboot the VM. After it restarts, wait until all related services are running, then connect to D365FO again.
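If there are several expired certificates, steps 2 to 6 can be partly scripted. The sketch below is not a tested tool, just the same commands wrapped in a loop. It assumes that every expired certificate in LocalMachine\My should be cloned and that the thumbprints are stored in *.config files directly under the webroot folder. Both are assumptions, so review the printed list before relying on it. The variable names `$store` and `$webRoot` are my own.

```powershell
# Sketch only: run in an elevated Windows PowerShell session on the dev VM.
# Assumption: every expired certificate in LocalMachine\My should be cloned.
$store   = 'Cert:\LocalMachine\My'
$webRoot = 'C:\AOSService\webroot'   # folder from step 6

# Steps 2-3: find the expired certificates and show their thumbprints.
$expired = Get-ChildItem -Path $store | Where-Object { $_.NotAfter -lt (Get-Date) }
$expired | Format-Table Subject, Thumbprint, NotAfter -AutoSize | Out-Host

foreach ($oldCert in $expired) {
    # Step 4: clone the certificate with a later expiration date.
    # The store is passed explicitly because the current location is not changed here.
    $newCert = New-SelfSignedCertificate -CloneCert $oldCert -CertStoreLocation $store -NotAfter (Get-Date).AddMonths(999)
    Write-Host "Cloned $($oldCert.Thumbprint) -> $($newCert.Thumbprint)"

    # Step 6 (read-only): list files that still contain the old thumbprint.
    # Assumption: the thumbprints live in *.config files; widen the filter if needed.
    Get-ChildItem -Path $webRoot -Filter *.config -File |
        Select-String -Pattern $oldCert.Thumbprint -SimpleMatch -List |
        Select-Object -ExpandProperty Path
}
```

The script deliberately only reports which files mention the old thumbprint and leaves the actual replacement to you, so that nothing under the webroot is changed without a look (and a backup) first.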

Thanks for reading. Until the next post!